The Face2Mesh project explores the use of Graph Convolutional Neural Networks (GCNNs) to generate 3D face meshes from a single frontal image. The long-term goal of this project is a non-linear 3D morphable model (3DMM) built from "in-the-wild" photos, which could support applications such as facial animation, virtual try-on, and facial recognition. Specifically, I plan to use this project to extend my work on Text-Driven Mouth Animations to Text-Driven Facial Animations.

Data Creation

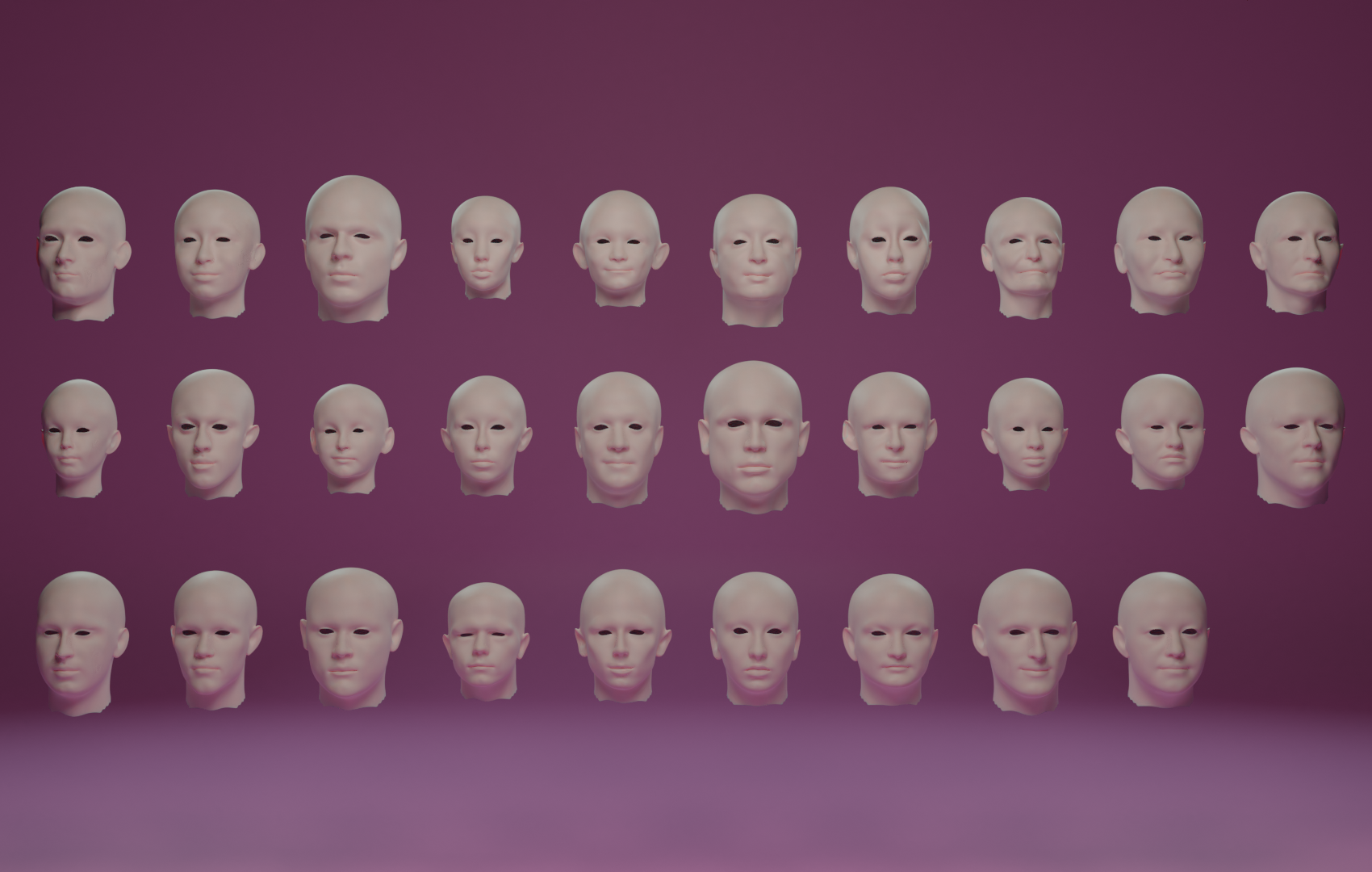

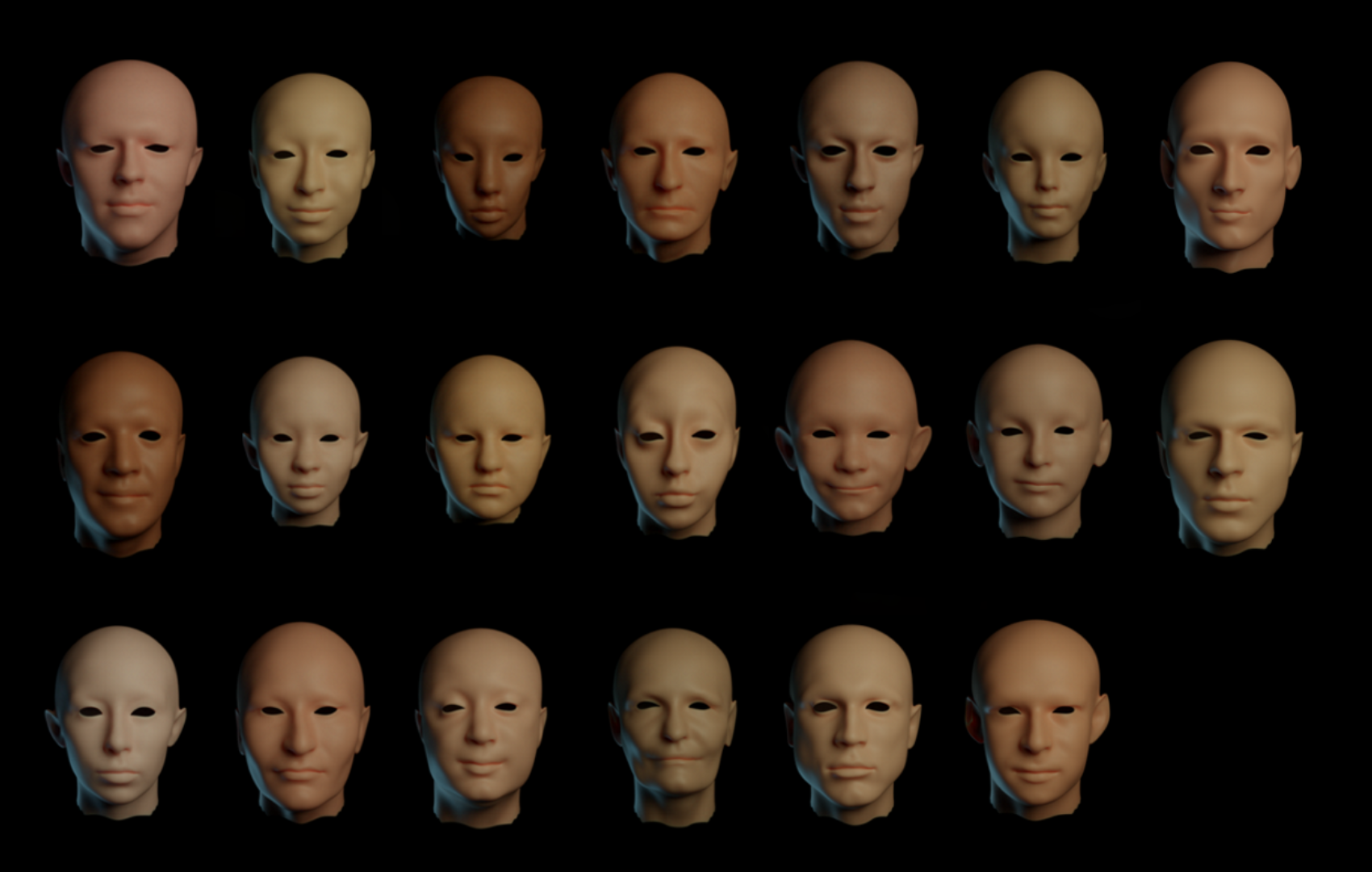

One of the key steps in any machine learning project is creating a high-quality dataset. For Face2Mesh, I spent hours recreating and sculpting the faces of my friends and family in Blender. Every face was sculpted from the same base mesh so that all meshes keep the same vertices, and the goal was to provide a small toy dataset for this exploration.
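Sculpting from one base mesh means that, as long as no sculpt changes the topology, vertex `k` refers to the same facial location in every face, so the whole dataset can be stored as a single array with one shared face list. Below is a minimal NumPy sketch of that check and stacking step, assuming each mesh has already been exported from Blender as a `(vertices, faces)` pair of arrays; `stack_meshes` is a hypothetical helper, not code from the project.

```python
import numpy as np

def stack_meshes(meshes):
    """Stack (vertices, faces) pairs that share one base-mesh topology.

    meshes: list of (vertices, faces), vertices of shape (V, 3) and
    faces an integer array of shape (F, 3).
    Returns (X, faces) where X has shape (N, V, 3).
    """
    ref_vertices, ref_faces = meshes[0]
    for i, (verts, faces) in enumerate(meshes):
        # Same vertex count and identical face list: vertex k means the
        # same facial location in every sculpt (assumed, not verified
        # semantically).
        if verts.shape != ref_vertices.shape or not np.array_equal(faces, ref_faces):
            raise ValueError(f"mesh {i} does not share the base-mesh topology")
    X = np.stack([verts for verts, _ in meshes]).astype(np.float32)
    return X, ref_faces
```

With the data in this form, a single face array (and therefore a single graph) serves every training example, which is what lets one GCNN operate on all of the meshes.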

Model Architecture

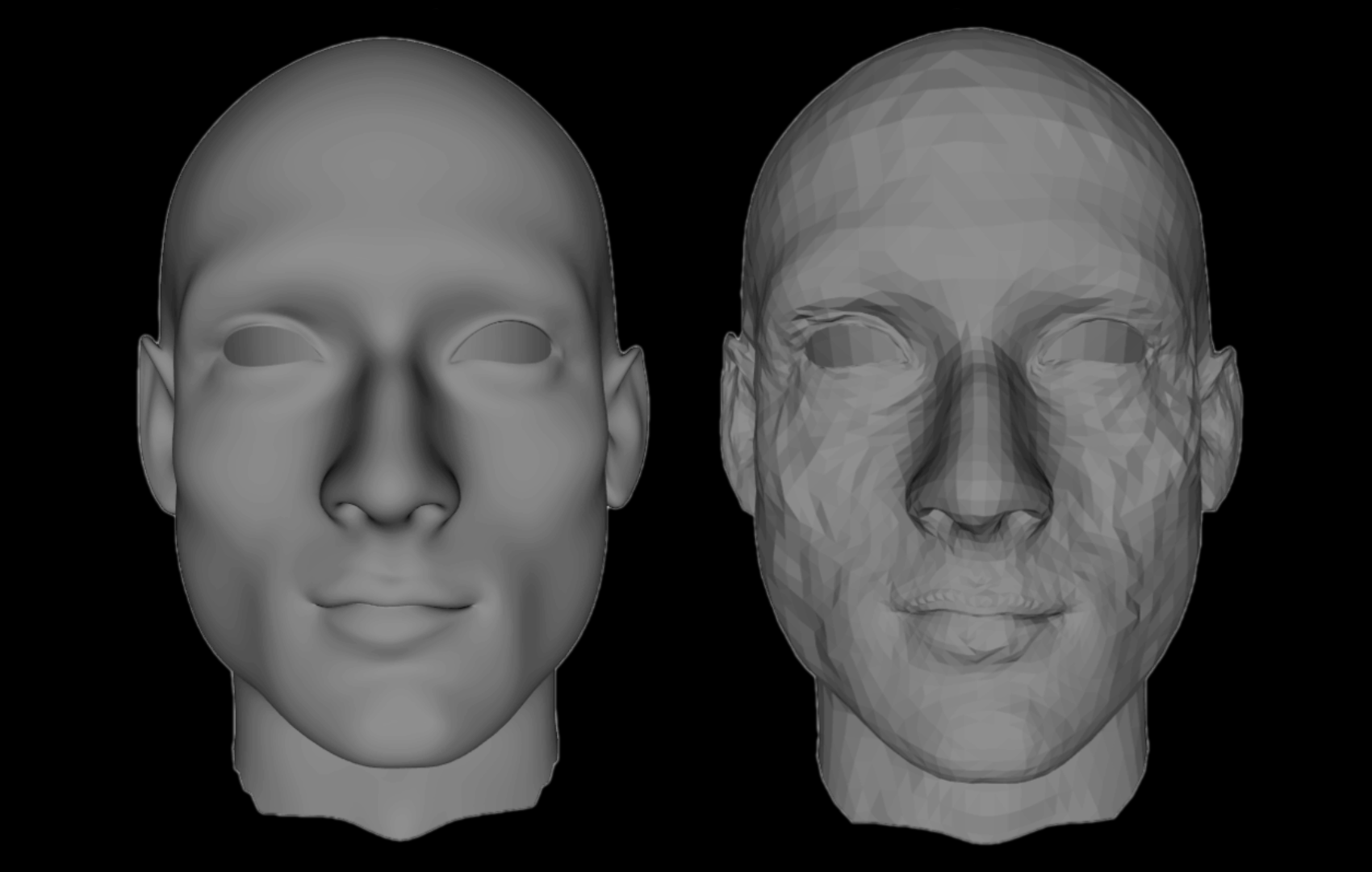

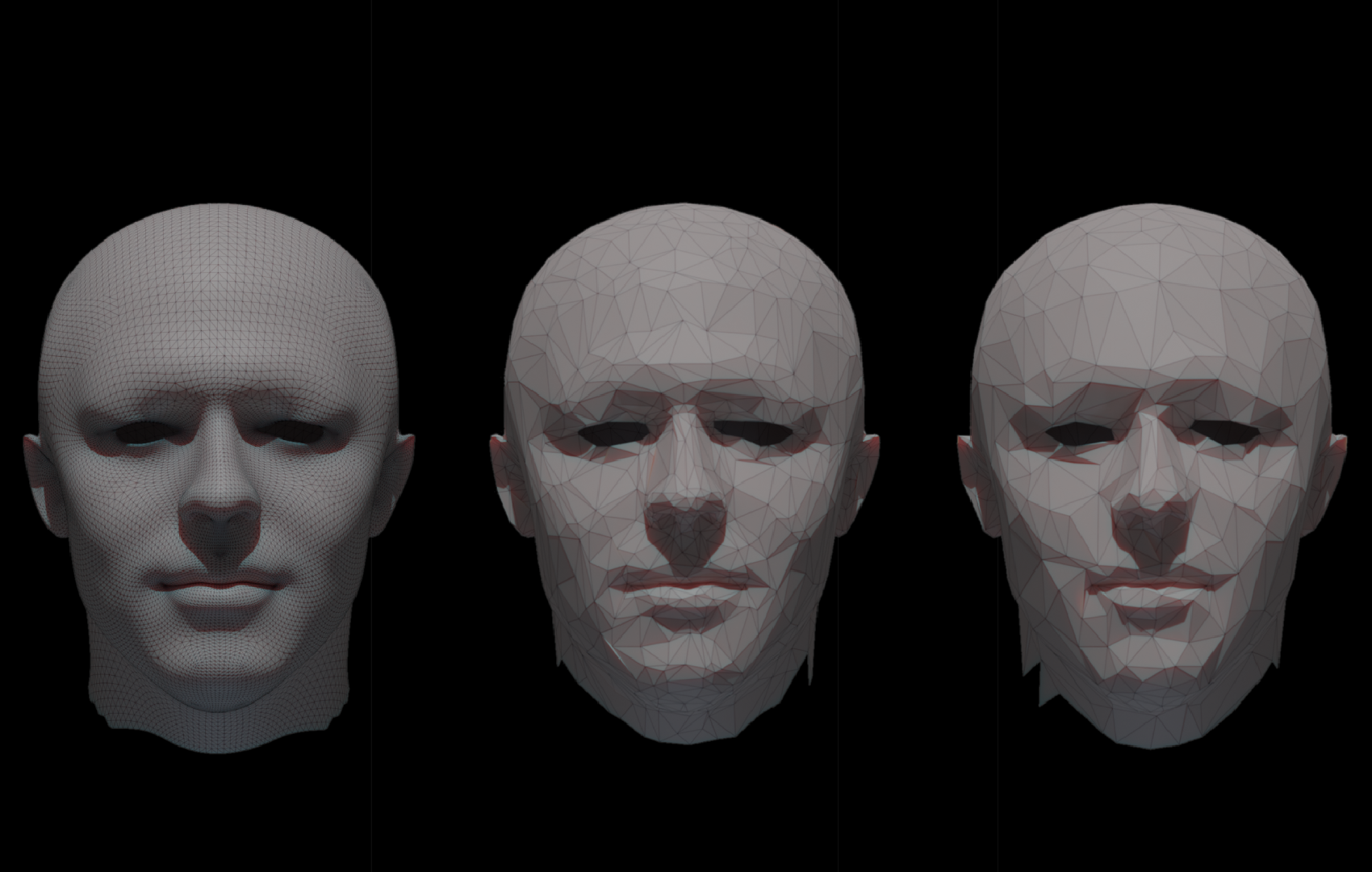

The Face2Mesh architecture is built from GCNN layers that use the Chebyshev spectral approximation of graph convolution, implemented via message passing. Mesh pooling plays the role that max pooling plays for images: it reduces the dimensionality of the extracted mesh features by decreasing the number of vertices while preserving the general shape of the mesh, guided by a quadric error metric.
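Both building blocks can be sketched in a few lines of NumPy. This is a minimal illustration, not the project's code: it assumes a dense adjacency matrix built from triangle faces (fine for small meshes), and the names `mesh_adjacency`, `scaled_laplacian`, `cheb_conv`, `vertex_quadrics` and `quadric_error` are hypothetical. The convolution uses the standard Chebyshev recurrence `T_k = 2 L~ T_{k-1} - T_{k-2}` on the scaled normalized Laplacian; each multiplication by `L~` mixes every vertex with its 1-ring neighbours, which is the message-passing step. The quadric part only computes the Garland-Heckbert per-vertex cost that edge-collapse decimation uses; it does not perform the full pooling.

```python
import numpy as np

def mesh_adjacency(faces, num_vertices):
    """Symmetric 0/1 adjacency matrix built from triangle edges."""
    A = np.zeros((num_vertices, num_vertices))
    for i, j, k in faces:
        for a, b in ((i, j), (j, k), (k, i)):
            A[a, b] = A[b, a] = 1.0
    return A

def scaled_laplacian(A):
    """L~ = 2 L / lambda_max - I, with L = I - D^-1/2 A D^-1/2."""
    deg = A.sum(axis=1)
    d_inv_sqrt = np.zeros_like(deg)
    d_inv_sqrt[deg > 0] = deg[deg > 0] ** -0.5
    n = len(A)
    L = np.eye(n) - d_inv_sqrt[:, None] * A * d_inv_sqrt[None, :]
    lam_max = np.linalg.eigvalsh(L).max()
    return 2.0 * L / lam_max - np.eye(n)

def cheb_conv(X, L_tilde, weights):
    """Chebyshev graph convolution: sum_k T_k(L~) X W_k.

    X: (V, F_in) vertex features; weights: (K, F_in, F_out).
    Order K reaches the (K-1)-hop neighbourhood of each vertex.
    """
    K = weights.shape[0]
    Tx_prev, Tx = X, L_tilde @ X          # T_0 X and T_1 X
    out = Tx_prev @ weights[0]
    if K > 1:
        out = out + Tx @ weights[1]
    for k in range(2, K):
        # T_k X = 2 L~ T_{k-1} X - T_{k-2} X
        Tx_prev, Tx = Tx, 2.0 * L_tilde @ Tx - Tx_prev
        out = out + Tx @ weights[k]
    return out

def vertex_quadrics(vertices, faces):
    """Per-vertex quadric Q_v = sum of p p^T over the planes of incident faces."""
    Q = np.zeros((len(vertices), 4, 4))
    for f in faces:
        v0, v1, v2 = vertices[f]
        n = np.cross(v1 - v0, v2 - v0)
        n = n / np.linalg.norm(n)
        p = np.append(n, -n @ v0)         # plane a*x + b*y + c*z + d = 0
        for v in f:
            Q[v] += np.outer(p, p)
    return Q

def quadric_error(Q_v, x):
    """Sum of squared distances from position x to the planes in Q_v."""
    h = np.append(x, 1.0)
    return h @ Q_v @ h
```

A vertex sitting at its original position has zero quadric error, and the error grows as it moves off its neighbouring face planes, so collapsing the edges with the lowest combined cost removes vertices where the shape changes least. In a real model, a library layer such as PyTorch Geometric's `ChebConv` would typically replace the hand-written `cheb_conv`, and the weights would be learned rather than fixed.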

Results

The model was trained on a dataset of 2D facial images paired with their corresponding 3D meshes. During evaluation, it reconstructed the general shape and low-frequency features of the faces well, but the high-frequency details were noisy. Adding a smoothness penalty to the objective function reduced this noise, at the cost of losing fine detail.
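The write-up does not specify the exact reconstruction loss or smoothness term, so the following is only an illustrative sketch of one common choice: a per-vertex squared-error reconstruction term plus a uniform ("umbrella") Laplacian penalty that measures how far each predicted vertex sits from the average of its neighbours. The names `uniform_laplacian`, `face2mesh_loss` and the default `smooth_weight=0.1` are hypothetical assumptions, not values from the project.

```python
import numpy as np

def uniform_laplacian(faces, num_vertices):
    """L = I - D^-1 A from triangle edges (assumes no isolated vertices)."""
    A = np.zeros((num_vertices, num_vertices))
    for i, j, k in faces:
        for a, b in ((i, j), (j, k), (k, i)):
            A[a, b] = A[b, a] = 1.0
    return np.eye(num_vertices) - A / A.sum(axis=1, keepdims=True)

def face2mesh_loss(pred, target, L, smooth_weight=0.1):
    """Reconstruction + weighted smoothness objective (illustrative form).

    pred, target: (V, 3) vertex positions.
    Returns (total, recon, smooth).
    """
    # Mean squared Euclidean distance between predicted and true vertices.
    recon = np.mean(np.sum((pred - target) ** 2, axis=1))
    # Offset of each predicted vertex from the mean of its neighbours.
    delta = L @ pred
    smooth = np.mean(np.sum(delta ** 2, axis=1))
    return recon + smooth_weight * smooth, recon, smooth
```

Because `L` has rows that sum to zero, translating the whole mesh leaves the smoothness term unchanged; only local bumps are penalized. Raising `smooth_weight` suppresses high-frequency noise more strongly, but it also pulls genuine fine detail toward the neighbour average, which is the trade-off observed in the results.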